Ollama

Note

If your TaskingAI server is deployed locally with Docker and the target model also runs in your local environment, OLLAMA_HOST should start with http://host.docker.internal:port instead of http://localhost:port. Inside the TaskingAI container, localhost refers to the container itself, not your machine. Replace port with your actual port number.
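The rule in the note can be expressed as a small helper. This is a minimal sketch, assuming you already know whether TaskingAI runs in Docker; the function name `ollama_host` is hypothetical and not part of TaskingAI. On Linux, Docker may only resolve `host.docker.internal` if the container is started with `--add-host=host.docker.internal:host-gateway`.

```python
def ollama_host(port: int = 11434, taskingai_in_docker: bool = False) -> str:
    """Return the OLLAMA_HOST value to enter in TaskingAI (hypothetical helper)."""
    # Inside a Docker container, "localhost" points at the container itself,
    # so the host machine has to be reached through host.docker.internal.
    hostname = "host.docker.internal" if taskingai_in_docker else "localhost"
    return f"http://{hostname}:{port}"
```

For example, `ollama_host(11434, taskingai_in_docker=True)` gives `http://host.docker.internal:11434`.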

Ollama is a framework for running large language models (LLMs) locally, either directly on your machine or in Docker containers. TaskingAI supports Ollama version 0.1.24 or later.
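Checking the minimum version with plain string comparison is error-prone: as text, "0.1.3" sorts after "0.1.24". A minimal sketch that compares versions numerically instead; the helper names are hypothetical, and it assumes you pass in just the version number (for example, the number shown by `ollama --version`).

```python
# Minimum Ollama version TaskingAI supports, per this page
MIN_OLLAMA_VERSION = (0, 1, 24)


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "0.1.24" or "v0.1.30-rc1" into (0, 1, 24) or (0, 1, 30)."""
    # Drop a leading "v" and any pre-release/build suffix, keep the numeric core,
    # so a pre-release is treated like its final release
    core = version.strip().lstrip("v").split("-")[0].split("+")[0]
    return tuple(int(part) for part in core.split("."))


def is_supported(version: str) -> bool:
    """Return True if the given Ollama version is 0.1.24 or later."""
    # Tuples compare element by element as integers, so 0.1.3 < 0.1.24
    return parse_version(version) >= MIN_OLLAMA_VERSION
```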

Prerequisites

To integrate a model running on Ollama into TaskingAI, you first need a running Ollama service. To get started, visit Ollama's website or follow the instructions in the Quick Start.

Required credentials:

- OLLAMA_HOST: Your Ollama host URL.

Supported Models:

Wildcard

- Model schema id: ollama/wildcard

Quick Start

Deploy Ollama service to your local environment

- Download Ollama client from Ollama's website.

- Start your desired model with Ollama: `ollama run <model_name>`

By default, the model runs on localhost at port 11434, and you can access it by sending POST requests to the Ollama API at http://localhost:11434. For a detailed list of models, please check Ollama Models.
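To confirm the service responds before connecting TaskingAI, you can send a POST request yourself. A minimal sketch using only the Python standard library and Ollama's `/api/generate` endpoint; the helper names are hypothetical, and the model name you pass should be the one you started with `ollama run`.

```python
import json
import urllib.request

# Default address from the Quick Start; adjust if Ollama listens elsewhere
DEFAULT_OLLAMA_HOST = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           host: str = DEFAULT_OLLAMA_HOST) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks Ollama for one JSON object instead of a stream of chunks
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, host: str = DEFAULT_OLLAMA_HOST) -> str:
    """Send the request and return the generated text from the "response" field."""
    request = build_generate_request(model, prompt, host)
    with urllib.request.urlopen(request, timeout=120) as reply:
        body = json.load(reply)
    return body["response"]
```

With Ollama running, `generate("llama2", "Why is the sky blue?")` returns the model's answer as a string; `llama2` here is only a placeholder for your `<model_name>`. Building the request separately from sending it keeps the payload easy to inspect without a live server.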

Integrate Ollama into TaskingAI

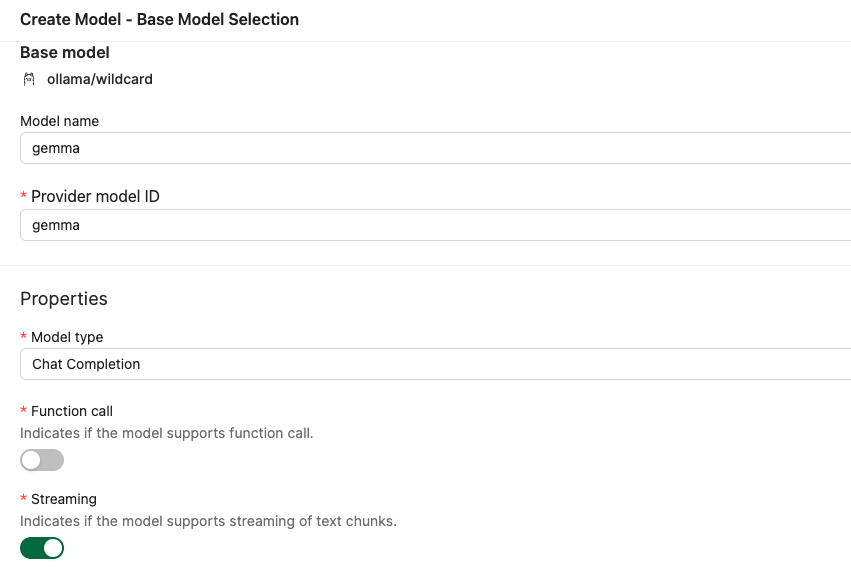

Now that you have a running Ollama service with your desired model, you can integrate it into TaskingAI by creating a new model with the following steps:

- Visit your local TaskingAI service page. By default, it runs at http://localhost:8080.

- Log in and navigate to the Model management page.

- Start creating a new model by clicking the Create Model button.

- Select Ollama as the provider and wildcard as the model.

- Use the Ollama service's address, http://localhost:11434, as OLLAMA_HOST.
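Before saving the model, it can help to confirm that the address is reachable and that your model is available. A minimal sketch using Ollama's `GET /api/tags` endpoint, which lists locally available models; the helper names are hypothetical. Run it from your host machine, where `localhost` is correct even if TaskingAI itself needs `http://host.docker.internal:11434`.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response."""
    # Each entry in "models" describes one locally available model
    return [entry["name"] for entry in tags_response.get("models", [])]


def list_local_models(host: str = "http://localhost:11434") -> list[str]:
    """Ask the Ollama service which models it has available locally."""
    # A successful reply also confirms that the host URL is reachable
    with urllib.request.urlopen(f"{host.rstrip('/')}/api/tags", timeout=10) as reply:
        return model_names(json.load(reply))
```

Names are returned with their tag (for example `llama2:latest`), which is the form Ollama uses to identify a model.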