Custom Host

If your TaskingAI server is deployed locally with Docker and the target model also runs in your local environment, CUSTOM_HOST_ENDPOINT_URL should start with http://host.docker.internal:port instead of http://localhost:port. Replace port with your actual port number.
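As a minimal sketch of the rule above, the helper below rewrites a loopback URL into its host.docker.internal form. The function name, the port 8000, and the path /v1/chat/completions are illustrative assumptions, not part of TaskingAI.

```python
from urllib.parse import urlsplit, urlunsplit

# Hostnames that point at the container itself when used inside Docker.
LOOPBACK_HOSTS = {"localhost", "127.0.0.1"}


def to_docker_host_url(url: str) -> str:
    """Rewrite a loopback URL so a Docker container can reach the host machine.

    URLs that do not point at a loopback host are returned unchanged.
    Any user:password part of the URL is dropped by this sketch.
    """
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url
    netloc = "host.docker.internal"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# Hypothetical local endpoint; replace the port and path with your own.
print(to_docker_host_url("http://localhost:8000/v1/chat/completions"))
```

The rewritten value is what you would then use for CUSTOM_HOST_ENDPOINT_URL.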

Background

The AI industry is growing rapidly, and more and more AI models are becoming available on the market. Although TaskingAI is committed to integrating AI models from different providers, your desired model may not be officially supported by TaskingAI.

The Custom Host feature allows you to integrate your self-hosted model or a model from a provider that is not officially supported by TaskingAI.

Models

At the moment, the industry has no agreed standard for the input and output schemas of AI models. To connect your model to TaskingAI, you need to make sure that it accepts and returns data in the format that TaskingAI expects.

TaskingAI currently supports two types of schemas from OpenAI for custom models, as their schemas are widely used in the industry:

OpenAI Tool Calls

- Model schema id: custom_host/openai-tool-calls

This schema is the latest version from OpenAI at the time of writing. A detailed explanation of the schema can be found in OpenAI's official documentation. This schema supports multiple tool calls in a single response.

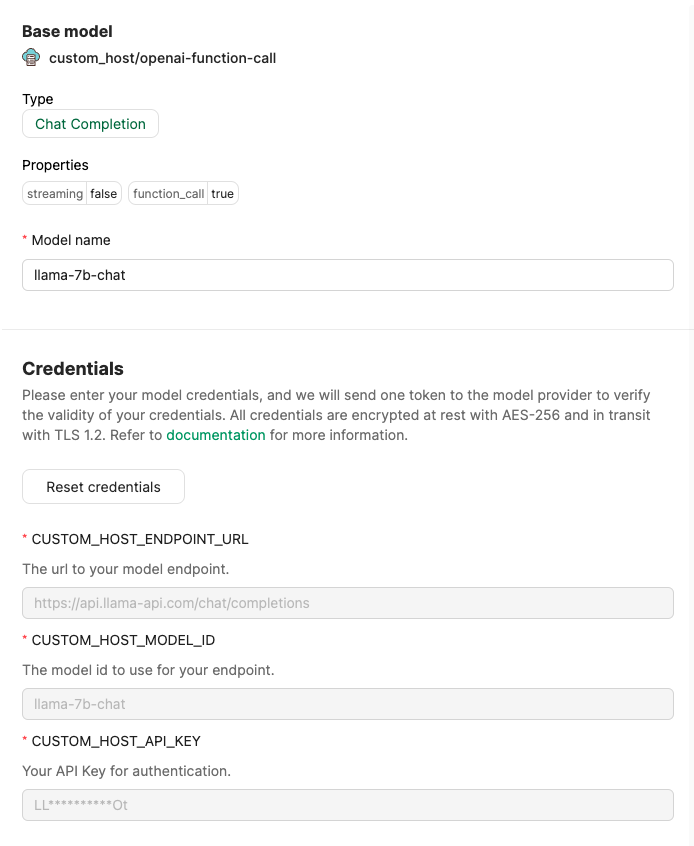
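For orientation, here is a rough sketch of an assistant message in the OpenAI tool-calls style and one way to read it. The tool names, call ids, and arguments are hypothetical, and the helper is not part of TaskingAI; check OpenAI's documentation for the authoritative schema.

```python
import json

# Example assistant message in the OpenAI tool-calls style.
# The tool names, ids, and arguments are hypothetical.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
        },
        {
            "id": "call_2",
            "type": "function",
            "function": {"name": "get_time", "arguments": '{"timezone": "Europe/Paris"}'},
        },
    ],
}


def parse_tool_calls(message: dict) -> list:
    """Return (call id, function name, decoded arguments) for each tool call."""
    calls = []
    for call in message.get("tool_calls") or []:
        function = call["function"]
        # "arguments" arrives as a JSON-encoded string, not a nested object.
        calls.append((call["id"], function["name"], json.loads(function["arguments"])))
    return calls


for call_id, name, arguments in parse_tool_calls(assistant_message):
    print(call_id, name, arguments)
```

A model connected through custom_host/openai-tool-calls would need to return tool requests in this list form, even when it requests only one tool.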

OpenAI Function Call

- Model schema id: custom_host/openai-function-call

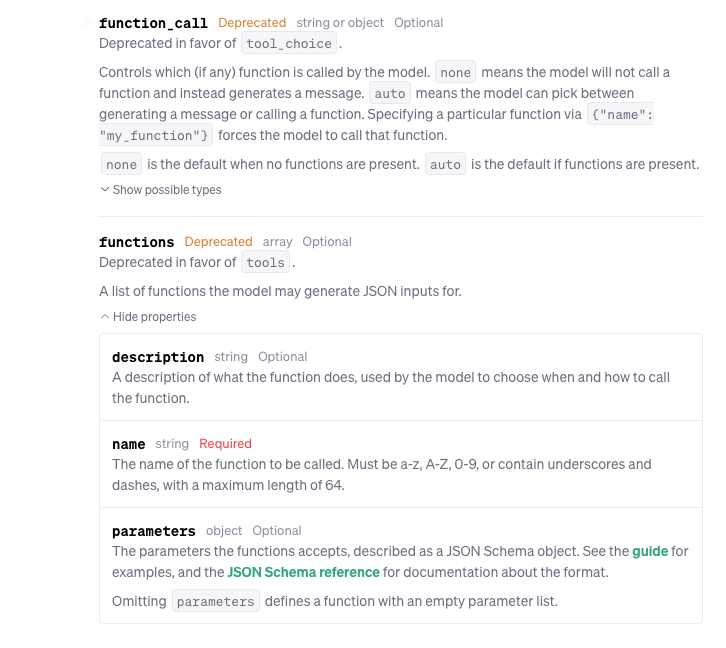

This schema has been deprecated by OpenAI, but many other providers in the industry have adopted it.

The main difference between openai-function-call and openai-tool-calls is that openai-function-call only supports one function call in one generation response.
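To make the difference concrete, the sketch below shows a legacy function-call message and wraps it as a one-item tool_calls list. The converter is a hypothetical illustration, not something TaskingAI provides, and the call id is a placeholder because the legacy format carries no id.

```python
import json

# Legacy function-call style: a single "function_call" object,
# with no list and no call id. Name and arguments are hypothetical.
legacy_message = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}


def function_call_to_tool_calls(message: dict, call_id: str = "call_0") -> dict:
    """Return a copy of the message with function_call wrapped as tool_calls.

    call_id is a made-up placeholder, since the legacy schema has no id field.
    """
    converted = {key: value for key, value in message.items() if key != "function_call"}
    function_call = message.get("function_call")
    if function_call:
        converted["tool_calls"] = [
            {"id": call_id, "type": "function", "function": dict(function_call)}
        ]
    return converted


converted = function_call_to_tool_calls(legacy_message)
print(len(converted["tool_calls"]))
print(json.loads(converted["tool_calls"][0]["function"]["arguments"]))
```

The reverse direction is lossy: a tool-calls message with several entries cannot be expressed as a single function_call.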

You can find the details in OpenAI's official documentation by expanding the deprecated content.

How to use

To integrate a model using the Custom Host feature, you need to prepare the following information:

- Function call support: let TaskingAI know if your target model supports function calls. This helps TaskingAI to better send requests and resolve responses.

- Streaming support: let TaskingAI know if your target model supports streaming. This helps TaskingAI to better send requests and resolve responses.

- Custom model host URL: the endpoint of your self-hosted model or of the model from a provider. The URL should be accessible from the internet and should accept POST requests.

- Note: The URL should be a callable endpoint, not just the IP address of the server.

- Model id: the model id that the custom host recognizes. This should be provided by the model provider.

- API key: if your target model requires an API key, you need to provide it, allowing TaskingAI to interact with the model.
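The checklist above can be collected into a small structure and used to build a test POST request before you register the model. This is only a sketch: the class and field names are hypothetical and do not mirror TaskingAI's own configuration, the Bearer authorization header assumes an OpenAI-style provider, and the URL and model id are placeholders.

```python
import json
from dataclasses import dataclass
from typing import Optional
from urllib.request import Request


@dataclass
class CustomHostSettings:
    """Hypothetical container for the values listed above."""
    endpoint_url: str               # callable endpoint that accepts POST
    model_id: str                   # id the custom host recognizes
    function_call: bool = False     # does the model support function calls?
    streaming: bool = False         # does the model support streaming?
    api_key: Optional[str] = None   # only if the host requires one


def build_probe_request(settings: CustomHostSettings) -> Request:
    """Build (but do not send) an OpenAI-style chat completion POST request."""
    body = {
        "model": settings.model_id,
        "messages": [{"role": "user", "content": "ping"}],
        "stream": settings.streaming,
    }
    headers = {"Content-Type": "application/json"}
    if settings.api_key:
        # Assumption: the host uses OpenAI-style Bearer authentication.
        headers["Authorization"] = f"Bearer {settings.api_key}"
    return Request(
        settings.endpoint_url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder values; replace them with your own endpoint and model id.
settings = CustomHostSettings(
    endpoint_url="http://host.docker.internal:8000/v1/chat/completions",
    model_id="my-model",
)
request = build_probe_request(settings)
print(request.get_method(), request.full_url)
```

Passing the request to urllib.request.urlopen from the machine that runs TaskingAI is one quick way to confirm that the endpoint is reachable and accepts POST requests.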