Knowledge

Many LLM applications require access to user-specific data that is not included in the model's training dataset. A key method for achieving this is Retrieval Augmented Generation (RAG): external data is retrieved and then supplied to the LLM as context during the generation phase.

Integrate Retrievals to Assistant

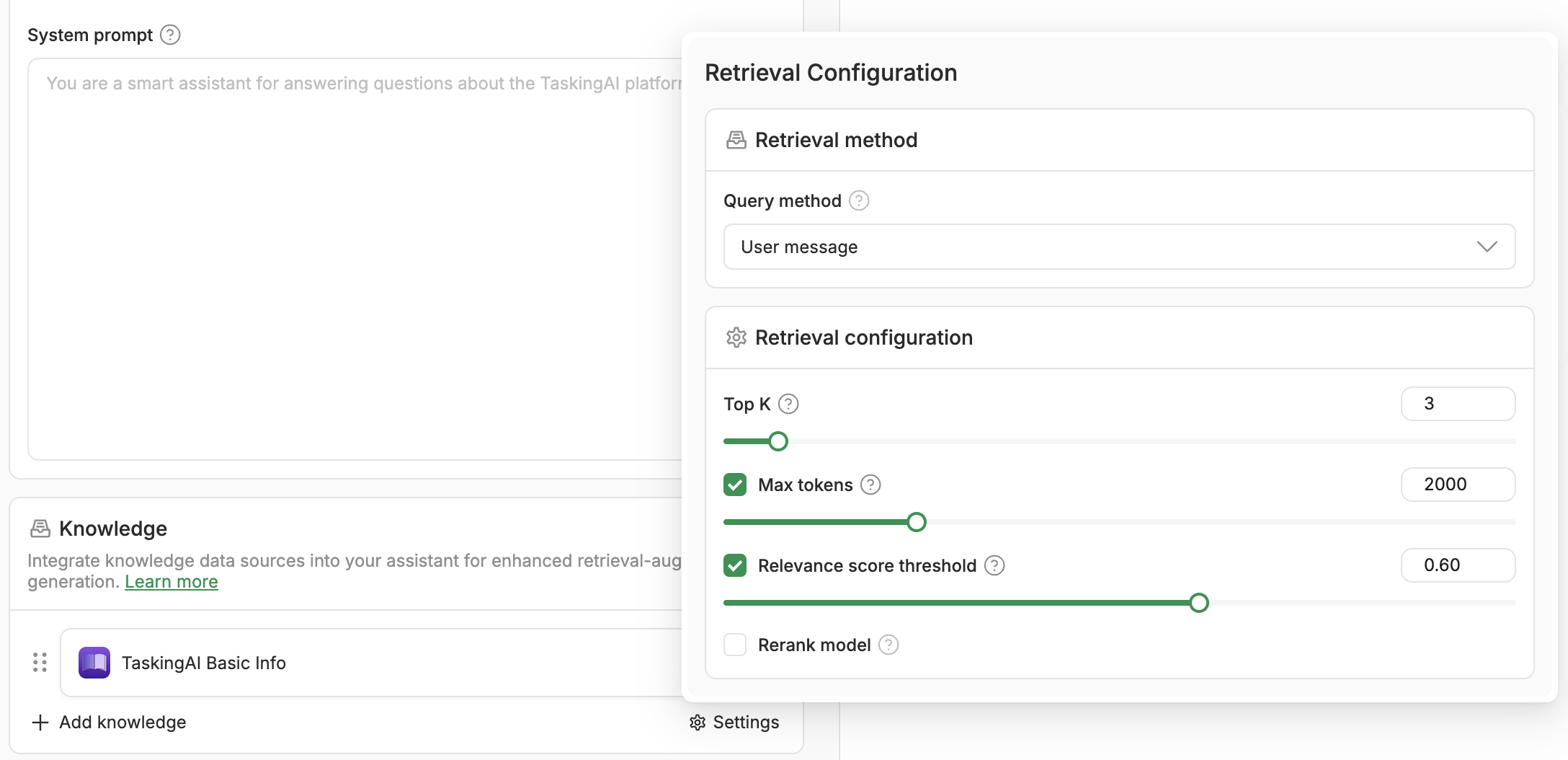

- Enter the Playground of the assistant, scroll down to the Knowledge section, and click the 'Add' button.
- From the pop-up window, click the add button for each retrieval collection you want to integrate with the assistant.
- Close the knowledge collection pop-up window and click the 'Save' button to confirm the changes.

Retrieval Configs

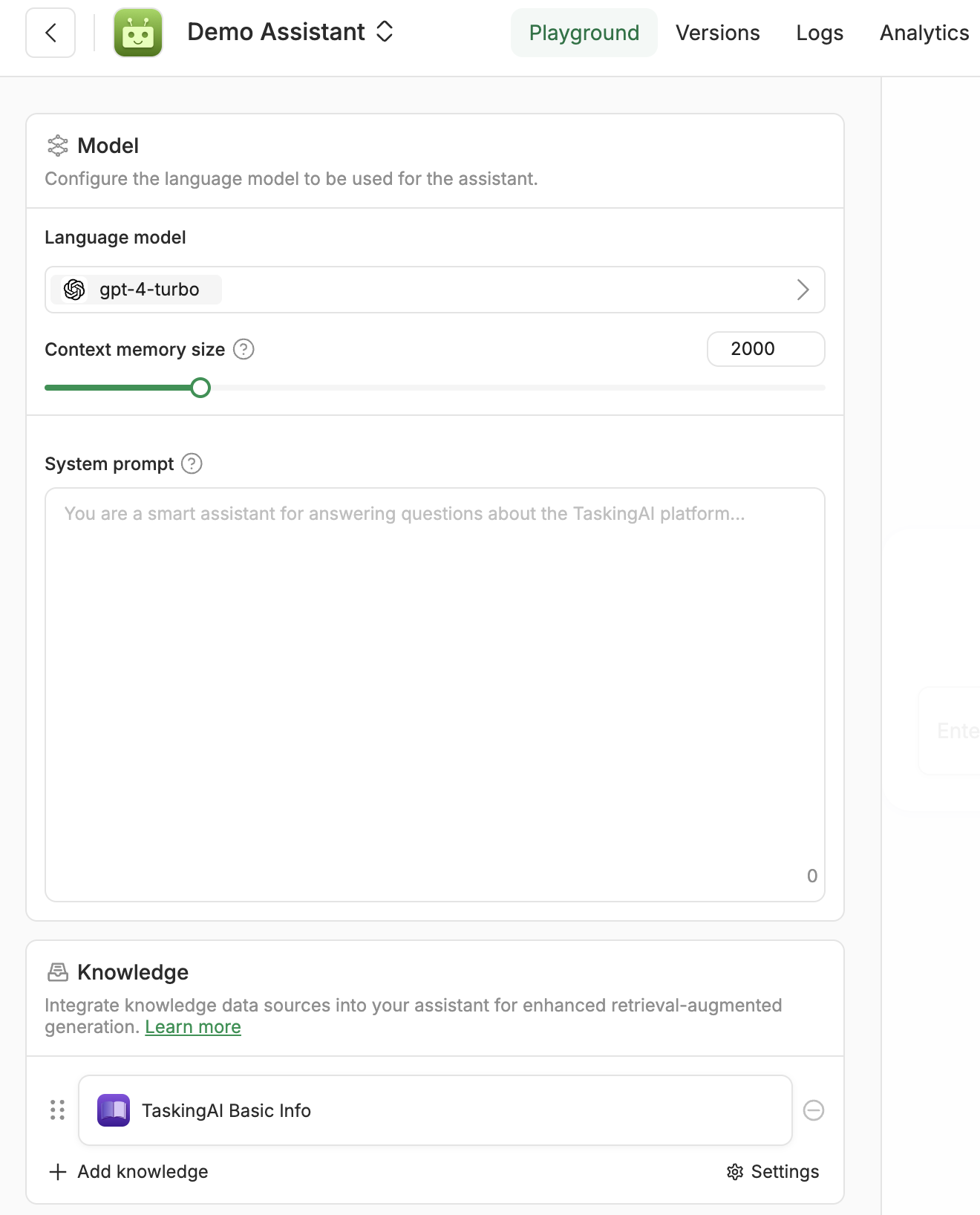

When integrating retrievals with an assistant, you can adjust the retrieval configuration to change how knowledge is retrieved. The configuration contains the following fields:

- Query Method: When the retrieval system fetches related chunks from the vector database, it needs a query as input. This field specifies how the query is generated.
  - User Message: The latest user message is used as the query.
  - Tool Call: The query is generated by the model and passed to the retrieval system as a tool call.
  - Memory: The query is the latest user message together with the context in the chat session.
- Top K: The number of chunks to be returned from the retrieval task.
- Max Tokens (Optional): The maximum number of tokens to be returned from the retrieval task.
- Score Threshold (Optional): The minimum similarity score a chunk must have to be returned from the retrieval task. The value is expected to be between 0.0 (extremely low relevance) and 1.0 (perfect equivalence).
- Rerank Model (Optional): The rerank model used to rerank the retrieved chunks. The model should be available in the Models tab.
- Function Description (Only available when Query Method is set to Tool Call): The function description for LLMs to call. If not provided, a default description is generated based on the collection name and description.
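To make the interaction between these fields more concrete, here is a minimal Python sketch. It is not the platform's implementation: the `Chunk` class, the `build_query` and `select_chunks` helpers, the method names `"user_message"`, `"memory"`, and `"tool_call"`, and the order in which the filters are applied are all assumptions made for illustration. Reranking is left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Chunk:
    """Hypothetical retrieved chunk; not a type from the platform."""
    text: str
    score: float  # similarity score between 0.0 and 1.0
    tokens: int   # number of tokens in `text`


def build_query(method: str, messages: List[str],
                model_query: Optional[str] = None) -> str:
    """Derive the retrieval query according to an assumed Query Method value."""
    if method == "tool_call":
        # The model writes the query itself and passes it in as a tool call.
        if model_query is None:
            raise ValueError("tool_call requires a model-generated query")
        return model_query
    if not messages:
        raise ValueError("at least one message is required")
    if method == "user_message":
        # Only the latest user message is used.
        return messages[-1]
    if method == "memory":
        # Latest message together with the earlier chat context.
        return "\n".join(messages)
    raise ValueError(f"unknown query method: {method}")


def select_chunks(chunks: List[Chunk], top_k: int,
                  max_tokens: Optional[int] = None,
                  score_threshold: Optional[float] = None) -> List[Chunk]:
    """Apply Score Threshold, Top K, and Max Tokens (assumed order)."""
    candidates = chunks
    if score_threshold is not None:
        # Drop chunks below the minimum similarity score.
        candidates = [c for c in candidates if c.score >= score_threshold]
    # Keep the top_k highest-scoring chunks.
    candidates = sorted(candidates, key=lambda c: c.score, reverse=True)[:top_k]
    if max_tokens is None:
        return candidates
    selected: List[Chunk] = []
    used = 0
    for chunk in candidates:
        # Stop at the first chunk that would exceed the token budget.
        if used + chunk.tokens > max_tokens:
            break
        selected.append(chunk)
        used += chunk.tokens
    return selected


if __name__ == "__main__":
    chunks = [Chunk("a", 0.9, 50), Chunk("b", 0.4, 30),
              Chunk("c", 0.75, 60), Chunk("d", 0.8, 40)]
    picked = select_chunks(chunks, top_k=3, max_tokens=100, score_threshold=0.5)
    print([c.text for c in picked])  # prints ['a', 'd']
```

In this sketch, chunk "b" is removed by the score threshold and chunk "c" is dropped because adding it would exceed the 100-token budget. The actual service may apply these limits in a different order or truncate chunks rather than dropping them.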