Hugging Face

This document explains how to integrate Hugging Face models through Hugging Face's Inference (Serverless) API. For information about integrating with Hugging Face Hub or the Hugging Face Inference API (dedicated), please refer to the other documents.

Prerequisites

To use models provided by Hugging Face, you need to have a Hugging Face API key. You can get one by signing up at Hugging Face.

Required credentials:

- HUGGING_FACE_API_KEY: Your Hugging Face API key.
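If you call Hugging Face from your own scripts, it is good practice to keep the key out of source code. The following minimal Python sketch assumes the key is exported as the HUGGING_FACE_API_KEY environment variable and that the Serverless Inference API accepts it as a bearer token. The helper name hugging_face_auth_header is hypothetical and is not part of TaskingAI.

```python
import os


def hugging_face_auth_header(env_var: str = "HUGGING_FACE_API_KEY") -> dict[str, str]:
    """Build an HTTP Authorization header from an API key stored in the environment.

    Assumption: the key lives in HUGGING_FACE_API_KEY and is sent as a bearer token.
    """
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(f"{env_var} is not set; create an API key in your Hugging Face account")
    return {"Authorization": f"Bearer {api_key}"}
```

Reading the key at call time means you can rotate it by changing the environment without touching code.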

Supported Models:

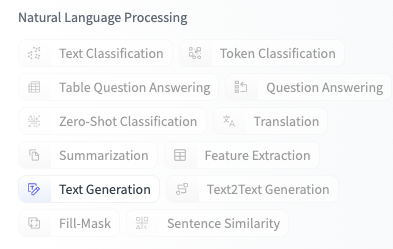

NOTE: Only Text Generation models that are available through the Hugging Face Inference (Serverless) API are supported by the Hugging Face integration. To find the latest models in this category, please visit Text Generation Models.

All models provided by Hugging Face have the following properties:

- Function call: Not supported

- Streaming: Not supported

Different models may accept different extra configs for text generation, so please check your target model's documentation. TaskingAI accepts all of the following parameters, but a parameter may not take effect if the target model does not support it:

- temperature

- top_p

- max_tokens

- stop

- top_k
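To illustrate how these configs might be forwarded to a model, here is a minimal Python sketch that builds a request body for a text generation call. The helper build_generation_payload and the name mapping (for example max_tokens to max_new_tokens) are assumptions for illustration, not TaskingAI's actual implementation; check your target model's documentation for the parameter names it really accepts.

```python
from typing import Any, Optional

# Hypothetical mapping from the configs listed above to parameter names
# assumed for the Text Generation task of the Serverless Inference API.
PARAM_NAME_MAP = {
    "temperature": "temperature",
    "top_p": "top_p",
    "top_k": "top_k",
    "max_tokens": "max_new_tokens",
    "stop": "stop",
}


def build_generation_payload(prompt: str, configs: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-serializable request body for a text generation call.

    Keys outside PARAM_NAME_MAP are ignored and None values are dropped,
    so the model only receives parameters that were actually set.
    """
    parameters: dict[str, Any] = {}
    for key, value in (configs or {}).items():
        if value is None or key not in PARAM_NAME_MAP:
            continue
        # Assumption: stop sequences are sent as a list of strings.
        if key == "stop" and isinstance(value, str):
            value = [value]
        parameters[PARAM_NAME_MAP[key]] = value
    return {"inputs": prompt, "parameters": parameters}
```

Dropping unset values keeps the request minimal, which matters here because a model may reject or ignore parameters it does not support.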

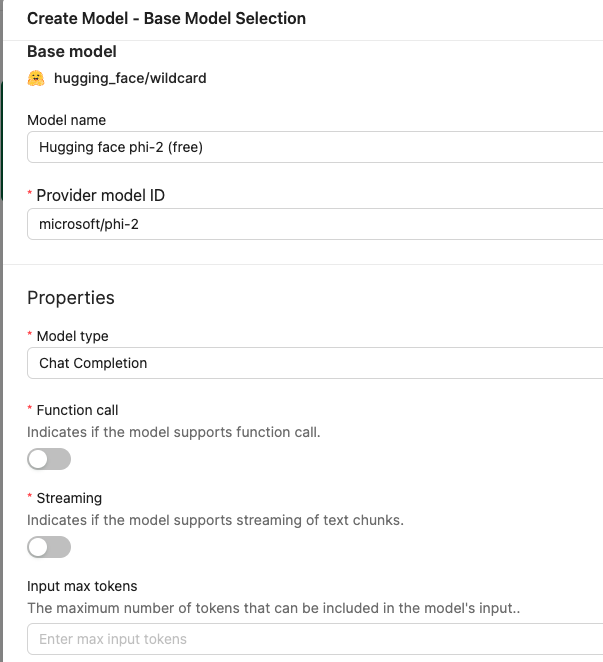

Wildcard

- Model schema id: huggingface/wildcard

Since Hugging Face is a platform that hosts thousands of models, TaskingAI provides a wildcard model that can integrate any eligible model on Hugging Face.

Currently, the eligible models are Text Generation models that are available through the Hugging Face Inference (Serverless) API.

To integrate a specific model, pass its model ID as the Provider Model Id parameter, for example google/gemma-7b or meta-llama/Llama-2-7b-chat-hf.
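Before passing a Provider Model Id, it can help to sanity-check its format. The Python sketch below applies a simplified pattern for Hugging Face model IDs (an optional namespace followed by a name) and builds an endpoint URL from it. The helper serverless_model_url, the pattern, and the base URL https://api-inference.huggingface.co/models are assumptions; confirm the endpoint against Hugging Face's current documentation.

```python
import re

# Simplified check for IDs such as "google/gemma-7b"; the Hub's real rules are stricter.
_MODEL_ID_RE = re.compile(r"(?:[A-Za-z0-9][\w.-]*/)?[A-Za-z0-9][\w.-]*")

# Assumed base URL of the Serverless Inference API.
SERVERLESS_BASE_URL = "https://api-inference.huggingface.co/models"


def serverless_model_url(provider_model_id: str) -> str:
    """Return the assumed serverless endpoint for a wildcard model ID."""
    model_id = provider_model_id.strip()
    if not _MODEL_ID_RE.fullmatch(model_id):
        raise ValueError(f"not a valid Hugging Face model id: {provider_model_id!r}")
    return f"{SERVERLESS_BASE_URL}/{model_id}"
```

For example, serverless_model_url("google/gemma-7b") returns https://api-inference.huggingface.co/models/google/gemma-7b, while an ID containing spaces raises ValueError.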