Llama API

Requisites

To use models provided by Llama API, you need a Llama API key. You can get one by signing up at Llama API.

Required credentials:

- LLAMA_API_API_KEY: Your Llama API key.
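If you keep the key in an environment variable of the same name, a small guard like the one below can fail early when it is missing. This is a minimal sketch under that assumption. How TaskingAI itself ingests credentials is not described here, and the helper name load_llama_api_key is hypothetical.

```python
import os
from typing import Mapping, Optional


def load_llama_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical helper: read LLAMA_API_API_KEY from an environment mapping.

    Defaults to os.environ. Surrounding whitespace is stripped, and a missing
    or blank value raises RuntimeError.
    """
    source = os.environ if env is None else env
    key = source.get("LLAMA_API_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "LLAMA_API_API_KEY is not set; sign up at Llama API to obtain a key."
        )
    return key
```

Passing an explicit mapping, for example load_llama_api_key({"LLAMA_API_API_KEY": "your-key"}), makes the helper easy to test without touching the real environment.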

Supported Models:

All models provided by Llama API have the following properties:

- Function call: Supported

- Streaming: Not supported

Different models may accept different extra configs when generating text, so please check your target model's documentation. TaskingAI accepts all of the following parameters, but a parameter may not take effect if the target model does not support it:

- temperature

- top_p

- max_tokens
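One way to keep a request limited to the parameters listed above is to filter the options before sending them. The sketch below is a hypothetical helper (build_generation_config is not a TaskingAI function), and silently dropping unknown or unset keys is a design assumption for illustration; whether the target model honors the remaining values is still up to that model.

```python
from typing import Any, Dict

# The extra configs listed in this section; any other key is dropped by this sketch.
ACCEPTED_CONFIG_KEYS = ("temperature", "top_p", "max_tokens")


def build_generation_config(**options: Any) -> Dict[str, Any]:
    """Hypothetical helper: keep only accepted generation parameters that are set (not None)."""
    return {
        key: options[key]
        for key in ACCEPTED_CONFIG_KEYS
        if options.get(key) is not None
    }
```

For example, build_generation_config(temperature=0.7, max_tokens=256, seed=42) returns {"temperature": 0.7, "max_tokens": 256}; seed is dropped because it is not in the accepted list.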

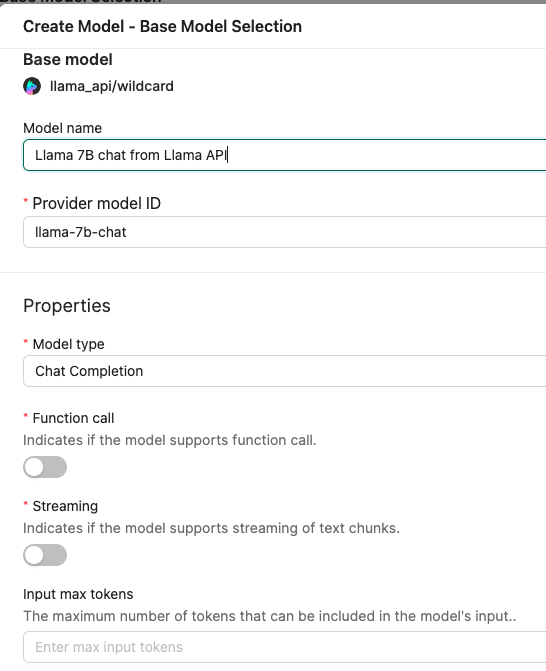

Wildcard

- Model schema id: llama_api/wildcard

Llama API hosts dozens of models from the Llama family behind a shared API. TaskingAI provides a wildcard model that can integrate all eligible models on Llama API.

When creating a model from Llama API on TaskingAI, you will be asked to specify the target provider_model_id, which is the name of your desired model. For an up-to-date list of eligible models, please check Available Models.
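As a rough illustration of what the wildcard model needs, the sketch below gathers the values named on this page (the llama_api/wildcard schema id, a provider_model_id, and the LLAMA_API_API_KEY credential) into a plain dictionary. The dictionary layout and the helper name build_wildcard_model_spec are hypothetical; TaskingAI's actual request format may differ.

```python
from typing import Dict

# Model schema id of the Llama API wildcard model, as given above.
WILDCARD_SCHEMA_ID = "llama_api/wildcard"


def build_wildcard_model_spec(provider_model_id: str, api_key: str) -> Dict[str, object]:
    """Hypothetical helper: collect the settings a Llama API wildcard model needs.

    The key names are illustrative only, not TaskingAI's real schema.
    Raises ValueError if provider_model_id is blank.
    """
    model_name = provider_model_id.strip()
    if not model_name:
        raise ValueError("provider_model_id must name a model available on Llama API")
    return {
        "model_schema_id": WILDCARD_SCHEMA_ID,
        "provider_model_id": model_name,
        "credentials": {"LLAMA_API_API_KEY": api_key},
    }
```

Calling build_wildcard_model_spec("llama-7b-chat", "your-key") would describe a wildcard model pointed at llama-7b-chat.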

Example of specifying the llama-7b-chat model: